Deepfake AI: Can You Actually Trust What You See Online?

In a world shaped by digital media, the rise of deepfake AI technology raises a pressing question: can we trust what we see online?

In this blog, we'll explore how deepfake AI technology has developed, what it is used for, its risks, and what the future might hold.

Deepfake AI technology has improved rapidly in the past few years. It can now create realistic videos and audio recordings that show people doing or saying things they never actually did. It relies on AI, particularly deep learning, to alter existing visual and audio content or to generate new content.

As a result, deepfakes can be highly convincing, making it hard for most people to tell real content from fake.

The Impact of Deepfake AI on Information Consumption

Deepfake AI technology has a major effect on how we consume information. It can transform entertainment, education, and the creative fields by enabling new ways to express ideas and tell stories. For example, filmmakers can use deepfakes to bring historical figures back to life or to create visual effects. However, deepfakes also have a darker side: they can be used to spread misinformation, create fake news, and damage reputations.

The ability to produce convincing counterfeit videos and recordings erodes people's trust in digital media. As people learn how easy it is to manipulate video and audio, they start doubting every image and voice they encounter, which makes it hard to tell truth from lies. This distrust has broad social costs: it reduces the credibility of information sources, impedes meaningful public discussion, and blurs the shared sense of what is real.

How does Deepfake AI technology work?

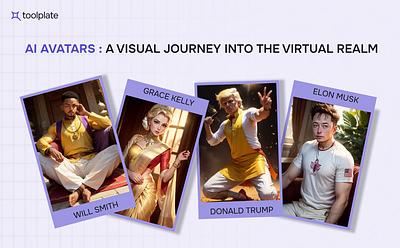

Deepfake AI technology refers to advanced AI algorithms that create or alter videos and images in ways that can deceive viewers. It often relies on tools like FaceSwap AI to place one person's face on another person's body in videos or pictures. Under the hood, it builds on machine learning and neural networks, often Generative Adversarial Networks (GANs), in which a generator network produces fake content and a discriminator network tries to tell it apart from real content; each network improves by competing against the other.
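For readers curious about the GAN idea, the original formulation (Goodfellow et al., 2014) trains a generator $G$ and a discriminator $D$ against each other using the minimax objective below. Here $D(x)$ is the discriminator's estimated probability that a sample $x$ is real, and $G(z)$ turns random noise $z$ into a fake sample. $D$ tries to maximize the objective (label real and fake correctly), while $G$ tries to minimize it (fool $D$). This is the textbook GAN objective shown for illustration only; practical deepfake tools often use variants of it or different architectures altogether, so it should not be read as the exact method any particular tool uses.

```latex
\min_{G}\,\max_{D}\; V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```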

Deepfake AI technology works by learning the patterns of a person's facial movements and expressions from many images or video frames. It then applies these patterns to another face, creating a seemingly authentic video. The underlying algorithms can analyze and reproduce subtle facial expressions, which is what makes the results so alarmingly realistic.

Applications of Deepfake AI Bots

Deepfake AI bots can do more than entertain. Companies are exploring them for personalized marketing and as virtual assistants, while schools are experimenting with deepfake-powered interactive lessons.

These bots can be used in both positive and negative ways across several sectors:

1. Entertainment

Deepfakes can create funny face swaps, dub videos in different languages, and enhance film production with voice cloning and virtual actors.

2. Accessibility

AI bots can translate sign language in real time, provide voice assistance for people with disabilities, and personalize education through interactive avatars.

3. Public awareness

Deepfakes can produce realistic videos that teach safety, use famous people to help share important messages, and bring historical figures back to life in digital form.

4. Innovation

AI-powered customer service bots can personalize conversations, provide 24/7 support, and demonstrate how products work in online stores.

Ethical Concerns of Deepfakes

Even though deepfakes can do good things, we must not forget the harm they can cause. They can be used to spread political disinformation or to harass people, which raises serious questions about ethics and about their effect on society.

The potential benefits of deepfakes do not outweigh the risks they bring. People with malicious intent can use them to spread false information, damage reputations, and even influence election outcomes. Fabricated compromising videos can ruin careers and stir conflict within communities.

Deepfake AI Threats

- Misinformation: Deepfakes can make fake news videos that quickly spread on social media, making people doubt real news sources and manipulating public opinion.

- Cybercrime: Criminals can use deepfakes to impersonate someone and trick victims into revealing personal details or sending money.

- Social Engineering: Malicious actors can use deepfakes to manipulate people into actions such as voting for a specific candidate or donating to a fake charity.

- Reputational Damage: Deepfakes can fabricate embarrassing videos of people, damaging their careers and personal lives.

Case Studies: Deepfake AI Examples

- Taylor Swift Deepfake Case: Taylor Swift became the center of controversy when fake pornographic images of her spread across the internet. The case highlighted the difficulties that tech companies and anti-abuse groups face. The issue gained wide attention on social media, especially on X, where the hashtag #ProtectTaylorSwift trended. According to some reports, the American singer-songwriter was furious and considering legal action.

- Rashmika Mandanna, Indian Actress: A viral video of Indian actress Rashmika Mandanna turned out to be a deepfake. The video, which drew more than 2.4 million views, appeared to show Mandanna entering an elevator. The original footage was actually of Zara Patel, a British influencer; deepfake technology had been used to place Mandanna's face on Patel's body.

- Deepfake of Volodymyr Zelensky: During the war between Russia and Ukraine, a deepfake video showed Ukrainian President Volodymyr Zelensky telling Ukrainians to surrender to Russia. The case shows how deepfake technology can be weaponized in conflicts between nations.

- Manoj Tiwari's Political Campaign: During the Delhi elections, a deepfake video showed political leader Manoj Tiwari speaking in a language he does not actually speak. The video was shared in thousands of WhatsApp groups, reaching a large number of voters during the campaign.

- Belgian Premier Sophie Wilmès Deepfake: A clip showed Belgian Prime Minister Sophie Wilmès linking the COVID-19 crisis to environmental destruction. The clip, created by Extinction Rebellion, used deepfake technology to alter one of her earlier speeches.

How Can You Detect Deepfake Content?

Identifying deepfake content is hard because the technology behind it keeps improving. Still, there are several ways to spot deepfakes:

1. Check for Irregularities in Facial Features

Deepfakes often have slight distortions in facial features. Look for any unnatural movements or inconsistencies, such as odd blinking patterns, misaligned eyes, or lips that don't sync perfectly with the audio.
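Odd blinking is one irregularity that can be measured. A common approach is the eye aspect ratio (EAR) of Soukupová and Čech (2016), which drops sharply when the eye closes. The sketch below is illustrative only and assumes you already have six eye landmarks per frame from some face-landmark detector (not shown here). The helper names, the 0.2 threshold, and the two-frame minimum are hypothetical choices for this example, not values taken from any particular tool.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR from six landmarks p1..p6: p1/p4 are the eye corners,
    p2/p3 lie on the upper lid, p6/p5 on the lower lid."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series: Sequence[float],
                 threshold: float = 0.2,   # assumed "eye closed" cut-off
                 min_frames: int = 2) -> int:  # assumed minimum blink length
    """Count runs of at least `min_frames` consecutive frames with EAR below threshold."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress when the clip ends
        blinks += 1
    return blinks


def blinks_per_minute(ear_series: Sequence[float], fps: float,
                      threshold: float = 0.2, min_frames: int = 2) -> float:
    """Blink rate over the clip, given the video's frame rate."""
    if not ear_series:
        raise ValueError("empty EAR series")
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series, threshold, min_frames) / minutes
```

In practice you would compute one EAR value per frame, then compare the resulting blink rate and blink timing with genuine footage of the same person; an unusually low or mechanically regular rate is a reason to look closer, not proof of a fake.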

2. Examine Skin Texture

The skin texture in deepfake videos can sometimes appear too smooth or inconsistent. This is particularly noticeable around the edges of the face.
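Overly smooth skin can be approximated as regions with unusually low local variation in brightness. The following toy heuristic, written under the assumption that the face region has already been cropped into a grayscale grid of intensities, splits the image into square patches and flags those whose variance is far below the image's median patch variance. The function names, patch size, and 0.1 ratio are hypothetical; makeup, lighting, and compression can also make genuine skin look smooth, so flagged patches are only hints.

```python
from statistics import median, pvariance
from typing import List, Tuple

Gray = List[List[float]]  # grayscale image: rows of pixel intensities (0-255)


def patch_variances(img: Gray, size: int = 4) -> List[List[float]]:
    """Variance of pixel intensities in each non-overlapping size x size patch."""
    rows, cols = len(img), len(img[0])
    grid = []
    for r in range(0, rows - size + 1, size):
        row_out = []
        for c in range(0, cols - size + 1, size):
            pixels = [img[r + i][c + j] for i in range(size) for j in range(size)]
            row_out.append(pvariance(pixels))
        grid.append(row_out)
    return grid


def flag_smooth_patches(img: Gray, size: int = 4,
                        ratio: float = 0.1) -> List[Tuple[int, int]]:
    """Return (patch_row, patch_col) of patches whose variance is below
    `ratio` times the median patch variance (an assumed threshold)."""
    grid = patch_variances(img, size)
    cutoff = ratio * median(v for row in grid for v in row)
    return [(pr, pc)
            for pr, row in enumerate(grid)
            for pc, v in enumerate(row)
            if v < cutoff]
```

Comparing each patch with the image's own median, rather than a fixed number, keeps the check relative to that particular video's lighting and resolution.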

3. Analyze the Audio

In some deepfakes, the voice might not sound natural, or there might be discrepancies in the tone and cadence. Pay attention to whether the voice matches the person’s usual speech patterns.
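One measurable part of tone is pitch. A minimal sketch, assuming raw audio samples for a short voiced frame, estimates the fundamental frequency by finding the lag at which the signal best matches a shifted copy of itself (autocorrelation). The function name and the 60-400 Hz search range are assumptions chosen for this example; dedicated speech-analysis tools use more robust pitch trackers.

```python
import math
from typing import Sequence


def estimate_pitch(samples: Sequence[float], sample_rate: int,
                   fmin: float = 60.0, fmax: float = 400.0) -> float:
    """Estimate the fundamental frequency (Hz) of a voiced frame by picking
    the autocorrelation peak among lags that correspond to fmin..fmax."""
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), len(samples) - 1)
    if max_lag < min_lag:
        raise ValueError("frame too short for the requested pitch range")
    mean = sum(samples) / len(samples)
    x = [s - mean for s in samples]  # remove DC offset
    best_lag, best_corr = min_lag, -math.inf
    for lag in range(min_lag, max_lag + 1):
        corr = sum(x[i] * x[i + lag] for i in range(len(x) - lag))
        if corr > best_corr:
            best_corr, best_lag = corr, lag
    return sample_rate / best_lag
```

For example, a pure 200 Hz tone sampled at 8 kHz repeats every 40 samples, so the strongest match is at lag 40 and the function returns 200.0. Running it frame by frame gives a pitch contour that could be compared with known-genuine recordings of the same speaker; a contour that is unnaturally flat or jumps erratically may deserve a closer listen.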

4. Check the Background and Context

Sometimes the background or context of the video might give away a deepfake. This includes things like anachronistic elements or backgrounds that don’t match the historical context of the person speaking.

5. Digital Forensic Tools

There are also digital forensic tools available that can analyze videos for signs of being a deepfake. These tools might look for inconsistencies at a pixel level or analyze the video for signs of digital manipulation.
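One simple pixel-level signal is temporal consistency: when faces are generated frame by frame, the result can flicker between frames. The sketch below is a toy illustration, not how any specific forensic tool works. It assumes frames are already decoded into equally sized grayscale grids, and the helper names and the factor of 3 are hypothetical choices.

```python
from statistics import median
from typing import List, Sequence

Frame = List[List[float]]  # one decoded grayscale frame


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute pixel change between two equally sized frames."""
    total, count = 0.0, 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    return total / count


def flag_flicker(frames: Sequence[Frame], factor: float = 3.0) -> List[int]:
    """Return indices i where the change from frame i-1 to frame i exceeds
    `factor` times the median frame-to-frame change (assumed threshold)."""
    diffs = [mean_abs_diff(frames[i - 1], frames[i]) for i in range(1, len(frames))]
    typical = median(diffs)
    return [i for i, d in enumerate(diffs, start=1) if d > factor * typical]
```

Using the median change as the baseline means ordinary motion in a clip raises the bar automatically, while an isolated jump (such as a face region that suddenly shifts in brightness) still stands out. Scene cuts also produce spikes, so flagged frames need a human look.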

6. Fact-Checking and Source Verification

Cross-reference the video with reliable sources. If the video is claiming to be of a well-known personality, check their official social media profiles or reliable news sources for any mention of the video.

7. Machine Learning Detection Software

Some companies and researchers are developing machine learning algorithms specifically designed to detect deepfakes. These tools can sometimes identify subtle signs that human observers might miss.

8. Educational Resources

Some organizations provide training and educational resources to help people better understand and identify deepfakes. Engaging with these resources can improve your ability to spot deepfakes.

Curbing Deepfake AI: Government Rules and Regulation

Governments and organizations around the world are looking for ways to regulate deepfake technology and limit its harms through legislation and detection technology. Here's a quick look at some important actions being taken:

1. United States

The United States has taken steps to address the problems caused by deepfakes. The National Defense Authorization Act (NDAA) requires the Department of Homeland Security to publish yearly reports on the potential dangers of deepfakes, such as foreign influence operations and fraud. Additionally, the Identifying Outputs of Generative Adversarial Networks Act directs federal support for research into deepfake technology and for the development of standards and deepfake detection capabilities.

2. European Union

In the European Union, there have been discussions about addressing deepfakes within existing frameworks such as the General Data Protection Regulation (GDPR). Much of the EU's focus has been on online disinformation, including deepfakes, through measures like the self-regulatory Code of Practice on Disinformation for online platforms. The EU AI Act, adopted in 2024, also introduces transparency obligations requiring that deepfake content be disclosed as artificially generated or manipulated.

3. Australia and Other Regions

In Australia, existing laws such as the Privacy Act and the Online Safety Act provide a framework for regulating deepfakes, particularly in cases of image-based abuse (so-called revenge porn) and cyber abuse material. The Australian Government is also considering tighter rules to combat deepfakes.

Other countries are also exploring rules to manage the spread and effects of deepfakes, aiming to protect individuals and society without unduly restricting freedom of expression and creativity.

Conclusion

Deepfake AI is a powerful technology that can transform entertainment, education, and advertising, but it can also be used to cause harm. As we continue to use this technology, it's important to build strong protections against malicious deepfakes, teach people how to recognize them, and encourage responsible use of the technology.